Where orchestration lives

Frameworks differ on who owns the orchestration: the app (orchestration frameworks) or the agent (agent SDKs).

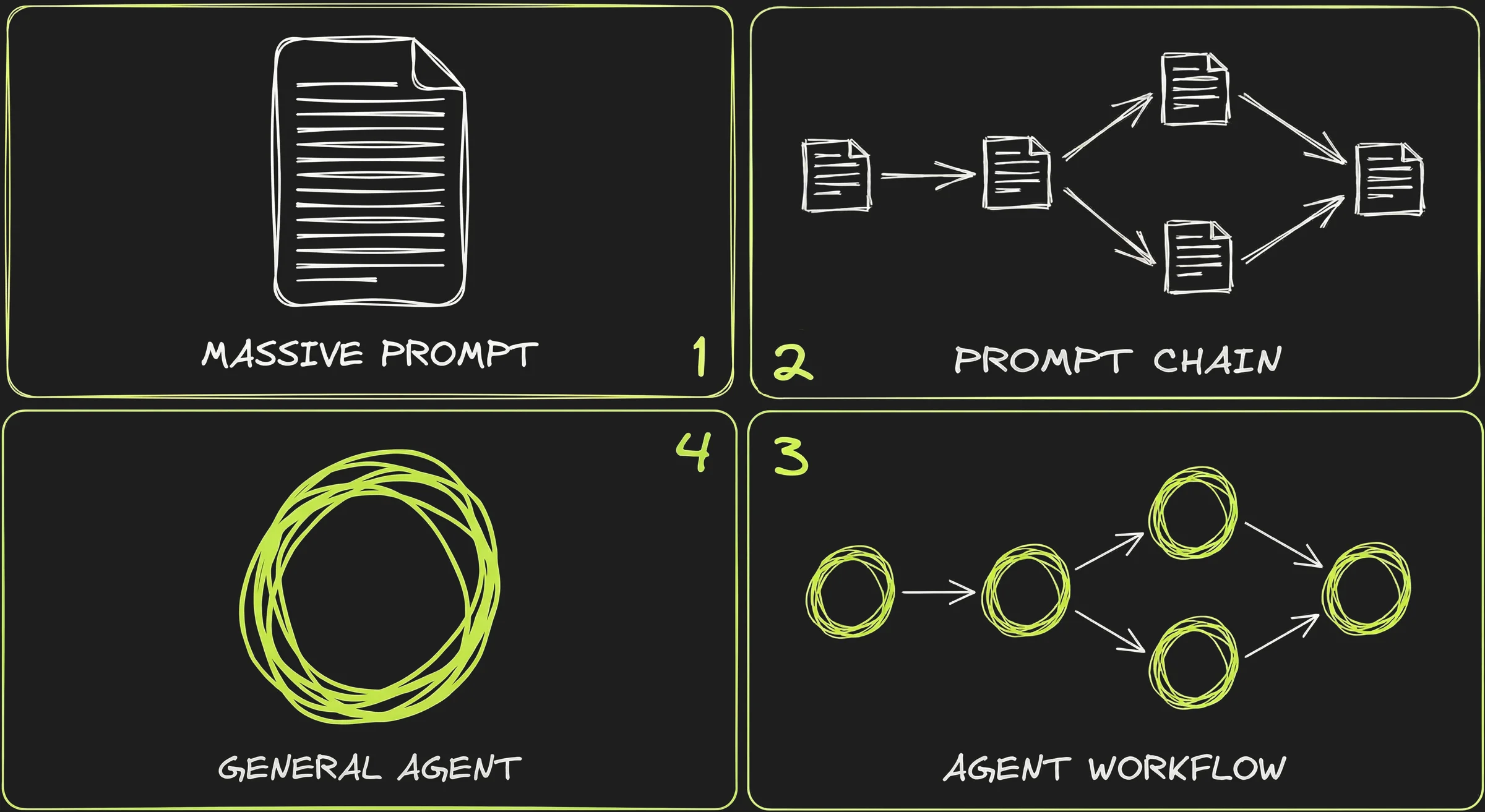

From prompting to agents

1️⃣ The Massive Prompt

At first people would cram all the instructions, context, examples, and output format into a single call and hope the LLM would get it right in one pass.

This was brittle:

- LLMs were unreliable on tasks that require multiple steps or intermediate reasoning.

- Long prompts produced less predictable output: some parts of the prompt would get overlooked or confuse the model. The longer the prompt, the less consistent the results over multiple runs.

- Long prompts were easy to break: even small changes could dramatically alter the behaviour.

2️⃣ The Prompt Chain

Getting better results meant breaking things down. Instead of one monolithic prompt, you split the task into smaller steps — each with its own prompt, its own expected output, and its own validation logic. The output of step 1 feeds into step 2, and so on.

With prompt chaining each step has a narrow, well-defined responsibility.
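A minimal sketch of such a chain may help. It assumes a hypothetical `call_llm` function standing in for a real model client (here a stub returning canned text so the example runs offline); the step names, prompts, and validators are illustrative only.

```python
from dataclasses import dataclass
from typing import Callable


def call_llm(prompt: str) -> str:
    """Stand-in for a real model call (assumption): canned text per step."""
    if prompt.startswith("Extract"):
        return "cuisine=Italian; party_size=4; day=Friday"
    if prompt.startswith("Write"):
        return "Looking for an Italian table for 4 on Friday."
    return ""


@dataclass
class Step:
    name: str
    prompt_template: str             # must contain "{input}"
    validate: Callable[[str], bool]  # narrow check on this step's output


def run_chain(steps: list[Step], user_input: str) -> str:
    """Run steps in order; each step's output becomes the next step's input."""
    current = user_input
    for step in steps:
        output = call_llm(step.prompt_template.format(input=current))
        if not step.validate(output):
            raise ValueError(f"step {step.name!r} produced invalid output: {output!r}")
        current = output
    return current


steps = [
    Step("extract", "Extract the booking fields from: {input}",
         validate=lambda out: "party_size=" in out),
    Step("summarize", "Write a one-line search request from: {input}",
         validate=lambda out: len(out) > 0),
]

result = run_chain(steps, "Italian restaurant near the office, Friday, 4 people")
print(result)
```

Because validation runs after every step, a bad intermediate output fails fast at the step that produced it instead of surfacing as a confusing final answer.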

3️⃣ The Workflow

Once you add tool calling, each step in the chain can now do real work — query a database, search the web, validate data against an API. The chain becomes a workflow: a sequence of steps implementing the agentic loop, connected by routing logic.

4️⃣ The "General Agent"

With better models, another option emerged: instead of defining the workflow step by step, give the agent tools and a goal, and let it figure out the steps on its own.

We are somewhat back to 1️⃣ — one prompt, one call — but with the addition of tool calling and much better (thinking) models. This is agent-driven control flow, and it coexists with workflows rather than replacing them.

An "orchestration" definition

Whether you define the workflow yourself (steps 2️⃣ and 3️⃣) or let the agent figure it out (step 4️⃣), someone has to decide the structure — the sequence of actions that leads to the outcome. That's what orchestration means.

Orchestration is the logic that structures the flow: the sequence of steps, the transitions between them, and how the next step is determined.

This section focuses on one question: who owns that logic, that is, who owns the control flow?

- App-driven control flow: the logic is decided by the developer and "physically constrained" through code.

- Agent-driven control flow: the logic is suggested by the developer, and the LLM / agent is left to follow the instructions.

App-driven control flow

Within the app-driven control flow, the app owns the state machine:

- The developer defines the graph: the nodes (steps), the edges (transitions), the routing logic.

- The LLM is a component called within each step, but the app enforces the flow defined by the developer.

Anthropic's "Building Effective Agents" blog post catalogs several variants of app-driven control flow:

- Prompt chaining — each LLM call processes the output of the previous one.

- Routing — an LLM classifies an input and directs it to a specialized follow-up.

- Parallelization: LLMs work simultaneously on subtasks, and their outputs are aggregated.

- Orchestrator-workers — a central LLM breaks down tasks and delegates to workers.

- Evaluator-optimizer — one LLM generates, another evaluates, in a loop.
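As an illustration of one of these variants, routing can be sketched as follows. The `classify` function is a keyword-based stand-in for an LLM classification call (an assumption made so the example runs offline), and the handler names are hypothetical.

```python
from typing import Callable


def classify(message: str) -> str:
    """Stand-in for an LLM classifier (assumption): simple keyword matching."""
    text = message.lower()
    if "refund" in text or "invoice" in text:
        return "billing"
    if "error" in text or "crash" in text:
        return "technical"
    return "general"


def handle_billing(message: str) -> str:
    return f"[billing prompt] {message}"


def handle_technical(message: str) -> str:
    return f"[technical prompt] {message}"


def handle_general(message: str) -> str:
    return f"[general prompt] {message}"


# The app, not the model, owns the mapping from label to follow-up step.
HANDLERS: dict[str, Callable[[str], str]] = {
    "billing": handle_billing,
    "technical": handle_technical,
    "general": handle_general,
}


def route(message: str) -> str:
    label = classify(message)
    handler = HANDLERS.get(label, handle_general)  # unknown labels fall back
    return handler(message)


print(route("I need a refund for last month"))
```

The model only produces a label; which code runs next is decided by the lookup table, which is what makes this app-driven.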

Orchestration frameworks provide the infrastructure for building these workflows. They abstract the plumbing so that developers can focus on the workflow logic. More specifically, they handle:

- Parsing tool calls, feeding results back into the next model call.

- Stop conditions, error handling, retries, timeouts.
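The plumbing listed above can be sketched as a small tool loop. Everything here is an assumption for illustration: `fake_model` stands in for a real model call, the JSON message shape is invented, and timeouts are left out for brevity.

```python
import json
from typing import Callable

Message = dict[str, str]


def fake_model(messages: list[Message]) -> str:
    """Stand-in for a model call (assumption). Emits one tool call as JSON,
    then a final answer once a tool result is present in the history."""
    if any(m["role"] == "tool" for m in messages):
        return json.dumps({"final": "Found: " + messages[-1]["content"]})
    return json.dumps({"tool": "search", "args": {"query": "italian"}})


def search(query: str) -> str:
    return f"Trattoria Roma ({query})"


TOOLS: dict[str, Callable[..., str]] = {"search": search}


def run_tool_loop(user_input: str, max_steps: int = 5, max_retries: int = 2) -> str:
    messages: list[Message] = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):                    # stop condition: step budget
        reply = json.loads(fake_model(messages))  # parse the model output
        if "final" in reply:                      # stop condition: model is done
            return reply["final"]
        tool = TOOLS[reply["tool"]]
        result = ""
        for attempt in range(max_retries + 1):    # retry failed tool calls
            try:
                result = tool(**reply["args"])
                break
            except Exception as exc:
                if attempt == max_retries:
                    result = f"tool error: {exc}"  # surface the error to the model
        messages.append({"role": "tool", "content": result})  # feed result back
    raise RuntimeError("step budget exhausted without a final answer")


print(run_tool_loop("Italian restaurant near the office"))
```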

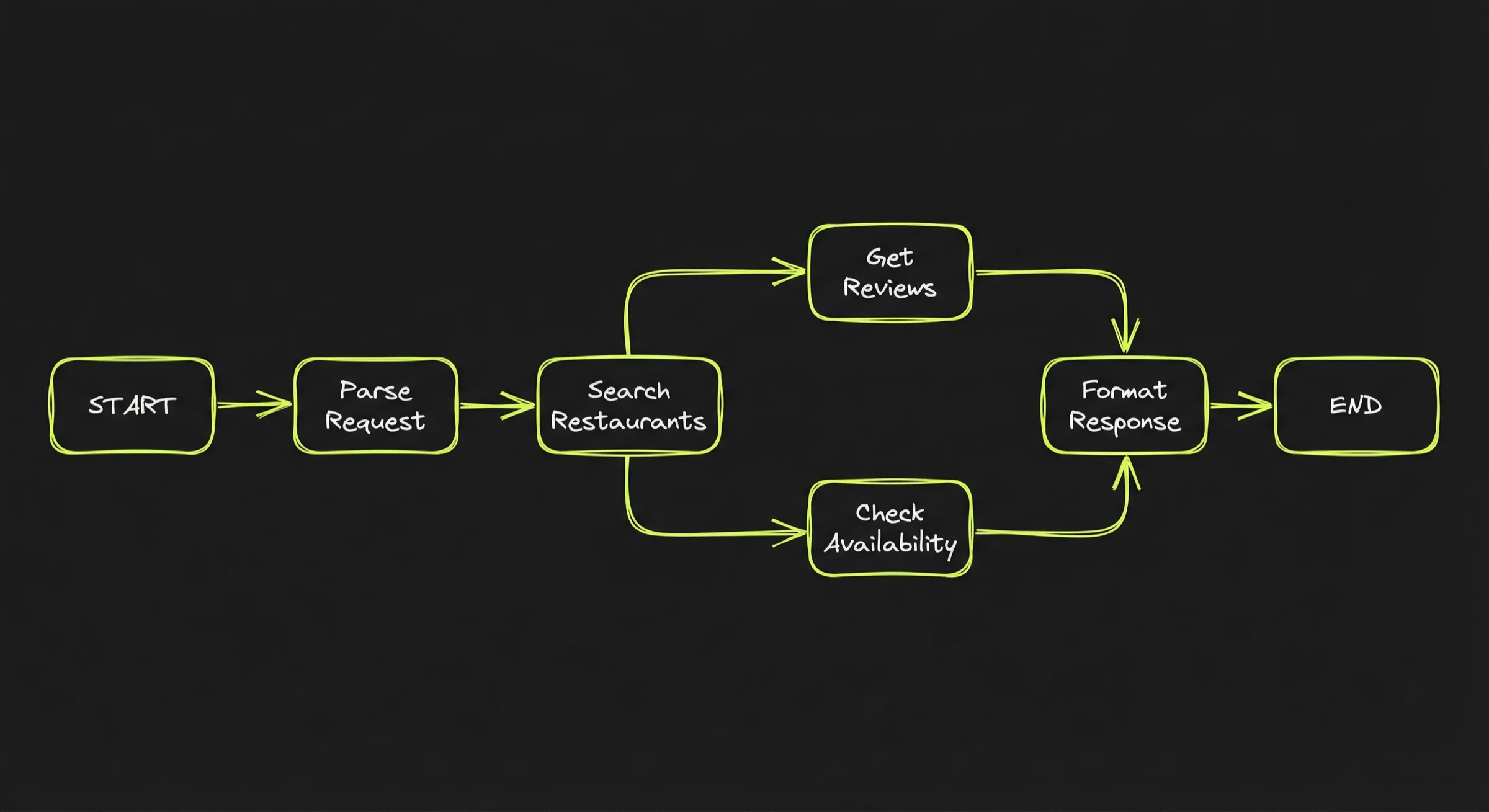

Here is schematically how the developer would implement the restaurant reservation workflow:

    # Schematic only: Workflow is a placeholder, not a specific framework's API.
    workflow = Workflow()

    # Register the steps.
    workflow.add_step("search", search_restaurants)
    workflow.add_step("get_reviews", fetch_reviews)
    workflow.add_step("check_avail", check_availability)
    workflow.add_step("respond", format_response)

    # Wire the transitions: search -> get_reviews -> check_avail -> respond.
    workflow.add_route("search", "get_reviews")
    workflow.add_route("get_reviews", "check_avail")
    workflow.add_route("check_avail", "respond")

    result = workflow.run("Italian restaurant near the office, Friday, 4 people")

On top of that, the developer defines the functions for each step. For example, search_restaurants might use the LLM internally to parse search results:

    def search_restaurants(query, location):
        # web_search and llm are placeholders for a search tool and a model call.
        raw_results = web_search(query + " near " + location)
        parsed = llm("Extract restaurant names and addresses from: " + raw_results)
        return parsed
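To make the schematic concrete, here is one way a `Workflow` class with `add_step`, `add_route`, and `run` could be implemented. This is a hypothetical sketch, not the API of any framework discussed in this report. The step functions are simplified stubs that take and return a single state value, with no real search or LLM call.

```python
from typing import Any, Callable, Optional

StepFn = Callable[[Any], Any]


class Workflow:
    """Tiny linear workflow engine: named steps plus explicit routes."""

    def __init__(self) -> None:
        self.steps: dict[str, StepFn] = {}
        self.routes: dict[str, str] = {}
        self.entry: Optional[str] = None

    def add_step(self, name: str, fn: StepFn) -> None:
        if self.entry is None:
            self.entry = name  # first registered step is the entry point
        self.steps[name] = fn

    def add_route(self, source: str, target: str) -> None:
        if source not in self.steps or target not in self.steps:
            raise KeyError(f"unknown step in route {source!r} -> {target!r}")
        self.routes[source] = target

    def run(self, payload: Any) -> Any:
        current = self.entry
        visited: list[str] = []
        while current is not None:
            if current in visited:
                raise RuntimeError(f"cycle detected at step {current!r}")
            visited.append(current)
            payload = self.steps[current](payload)
            current = self.routes.get(current)  # no outgoing route: stop
        return payload


# Stub steps (assumptions): each one enriches a shared state dict.
def search_restaurants(request: str) -> dict:
    return {"request": request, "candidates": ["Trattoria Roma", "Osteria Blu"]}


def fetch_reviews(state: dict) -> dict:
    return {**state, "reviews": {name: 4.5 for name in state["candidates"]}}


def check_availability(state: dict) -> dict:
    return {**state, "available": state["candidates"][:1]}


def format_response(state: dict) -> str:
    return "Available: " + ", ".join(state["available"])


workflow = Workflow()
workflow.add_step("search", search_restaurants)
workflow.add_step("get_reviews", fetch_reviews)
workflow.add_step("check_avail", check_availability)
workflow.add_step("respond", format_response)
workflow.add_route("search", "get_reviews")
workflow.add_route("get_reviews", "check_avail")
workflow.add_route("check_avail", "respond")

result = workflow.run("Italian restaurant near the office, Friday, 4 people")
print(result)
```

The engine never asks a model what to do next: the route table alone determines the sequence, which is the defining property of app-driven control flow.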

How the main orchestration frameworks compare:

- LangGraph (Python + TypeScript) was among the first such frameworks. You wire every node and edge by hand.

- PydanticAI (Python) takes a different approach: graph transitions are defined as return type annotations on nodes, so the type checker enforces valid transitions at write-time.

- Vercel AI SDK (TypeScript) started as a low-level tool loop + unified provider layer, then added agent abstractions in v5-v6 (2025).

- Mastra (TypeScript) builds on top of Vercel AI SDK: it delegates model routing and tool calling to the AI SDK and adds the application layer on top (workflows, memory, evaluation).

There are other such orchestration frameworks. Cues to recognize app-driven control flows:

- Explicit stage transitions in code or config.

- Multiple different prompts or schemas.

- The app decides when to request user input.

- The model may call tools within a step, but the macro progression is app-owned.

Agent-driven control flow

With agent-driven control flow, the agent decides what happens next.

It looks like this:

    agent = Agent(
        model="claude-sonnet",
        system_prompt="You are a coding assistant. Read files, edit code, run tests.",
        tools=[read_file, edit_file, run_tests, search_codebase],
        max_turns=50,
    )

    result = agent.run("Fix the failing test in src/auth.ts")
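Under the hood, such an `Agent` can be pictured as a loop in which the model picks the next action. The sketch below is hypothetical and is not the API of any SDK named in this report: `scripted_model` is a deterministic stand-in for the LLM, and the tools are stubs that return fixed strings.

```python
from typing import Callable

Tool = Callable[[str], str]
History = list[tuple[str, str, str]]  # (action, argument, observation)


def scripted_model(goal: str, history: History) -> tuple[str, str]:
    """Stand-in for the LLM (assumption). Returns (action, argument).
    A real model would choose based on the goal and the observations so far."""
    done = [action for action, _, _ in history]
    if "read_file" not in done:
        return ("read_file", "src/auth.ts")
    if "edit_file" not in done:
        return ("edit_file", "src/auth.ts")
    if "run_tests" not in done:
        return ("run_tests", "")
    return ("stop", "tests pass")


class Agent:
    def __init__(self, model: Callable[[str, History], tuple[str, str]],
                 tools: dict[str, Tool], max_turns: int = 50) -> None:
        self.model = model
        self.tools = tools
        self.max_turns = max_turns
        self.history: History = []

    def run(self, goal: str) -> str:
        self.history = []
        for _ in range(self.max_turns):
            action, arg = self.model(goal, self.history)  # the model decides
            if action == "stop":                          # the model decides when to stop
                return arg
            observation = self.tools[action](arg)
            self.history.append((action, arg, observation))
        return "stopped: max_turns reached"               # host-side safety limit


tools: dict[str, Tool] = {
    "read_file": lambda path: f"contents of {path}",
    "edit_file": lambda path: f"patched {path}",
    "run_tests": lambda _: "1 passed",
}

agent = Agent(model=scripted_model, tools=tools, max_turns=50)
result = agent.run("Fix the failing test in src/auth.ts")
print(result, [action for action, _, _ in agent.history])
```

The only control the host code keeps here is the `max_turns` budget; the order of reads, edits, and test runs comes entirely from the model's choices.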

The agent decides:

- What to read first.

- What to edit.

- When to run tests.

- Whether to try a different approach after a failure.

- When to stop.

The orchestration moves inside the agent loop: it's not enforced by the app but left to the model's own judgment. Agent SDKs provide a "harness" that can be customized by the developer. This harness provides orchestration cues to the model to steer it towards the expected goals:

- System prompts, policies and instructions (in agent.md or similar): the rules of the road, i.e. what to do, what not to do, how to behave.

- Tools: what pre-packaged tools are available to search, fetch, edit, run commands, apply patches.

- Permissions: which tools are allowed, under what conditions, with what scoping.

- Skills: pre-packaged behaviours and assets the agent can invoke.

- Hooks / callbacks: places the host can intercept or augment behavior (logging, approvals, guardrails).
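Two of these elements, permissions and hooks, can be sketched as a thin wrapper around tool execution. The `Harness` class and its method names are hypothetical and do not mirror any specific SDK; the tools are stubs.

```python
from typing import Callable, Iterable

Tool = Callable[[str], str]
Hook = Callable[[str, str], None]


class Harness:
    """Wraps tool execution with an allowlist and pre-call hooks."""

    def __init__(self, tools: dict[str, Tool], allowed: Iterable[str],
                 pre_tool_hooks: Iterable[Hook] = ()) -> None:
        self.tools = tools
        self.allowed = set(allowed)
        self.pre_tool_hooks = list(pre_tool_hooks)

    def call(self, name: str, arg: str) -> str:
        for hook in self.pre_tool_hooks:
            hook(name, arg)  # e.g. logging or an approval prompt
        if name not in self.allowed:
            # The refusal is returned as text so the agent can adapt its plan.
            return f"permission denied: {name}"
        return self.tools[name](arg)


audit_log: list[tuple[str, str]] = []


def log_call(name: str, arg: str) -> None:
    audit_log.append((name, arg))


harness = Harness(
    tools={
        "read_file": lambda path: f"contents of {path}",
        "run_command": lambda cmd: f"ran {cmd}",
    },
    allowed={"read_file"},
    pre_tool_hooks=[log_call],
)

print(harness.call("read_file", "a.txt"))
print(harness.call("run_command", "make deploy"))
```

The agent still chooses which tool to request; the harness only decides whether the request goes through and records that it happened.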

This report examines three agent SDKs that implement agent-driven control flow:

- Claude Agent SDK exposes the Claude Code engine as a library, with all the harness elements above built in.

- Pi SDK is an opinionated, minimalistic framework. Notably it can work in environments without bash or filesystem access, relying on structured tool calls instead.

- OpenCode ships as a server with an HTTP API — the harness plus a ready-made service boundary.

There are other agent-driven frameworks. Typical signs of agent-driven control flow:

- The hosting app is thin: it relays messages, enforces permissions, renders results.

- The logic lives in the harness in the form of system prompts, context files, skills and other "capabilities" that steer the agent towards the expected outcome.

What to keep in mind

Four points from this section:

- Orchestration is about who decides what happens next. In app-driven control flow, the developer defines the graph. In agent-driven control flow, the model decides based on goals, tools, and prompts. Both are valid — the choice depends on how predictable the task is.

- Orchestration frameworks handle the plumbing. Whether you choose app-driven or agent-driven, frameworks give you the loop, tool wiring, and error handling so you can focus on the logic — not on parsing JSON and managing retries.

- In agent-driven systems, the harness replaces the graph. The agent has more freedom, but it is not unsupervised. System prompts, permissions, skills, and hooks are what steer it. The harness is the developer's control surface when there is no explicit workflow.

- Orchestration libraries are adding agent-driven control flow: LangChain Deep Agents and PydanticAI both list deep agents as a first-class pattern.